Project goal

Anything connecting to a secure internal network should “double hop” through a DMZ.

I run an Exchange lab for my mail, but incoming mail never talks to it directly. Imagine if the SMTP command HELO 0xBADDCAFE caused a buffer overflow in Exchange 2022. Because senders on the Internet only ever speak to a Postfix box in a separate network, a bug like that is far less likely to compromise a sensitive box.

Why stop there? If HAProxy in a separate network fronts internal services such as NGINX, Internet clients never connect directly to an internal web server, which greatly reduces what an attacker can reach.

Right now, you’re reaching blog.abctaylor.com through exactly this setup.

Part 1: haproxy.cfg?

We won’t ever edit this file by hand. Just get a Linux server running and give it a name, such as proxy1. Puppet generates haproxy.cfg and handles all the other config.

Part 2: Puppet config

2A: The Puppetfile

Use something like the Puppetfile below, adjusted for your setup, and run `r10k puppetfile install` on your Puppet server.

```ruby
forge "http://forge.puppetlabs.com"

# Puppet
mod 'puppet-systemd', '3.5.1'
mod 'puppet-firewalld', '4.4.0'
mod 'puppet-nginx', '3.3.0'
mod 'puppet-cron', '3.0.0'
mod 'puppet-letsencrypt', '10.0.0'

# Puppetlabs
mod 'puppetlabs-stdlib', '8.1.0'
mod 'puppetlabs-concat', '6.4.0'
mod 'puppetlabs-inifile', '5.2.0'
mod 'puppetlabs-transition', '0.1.3'
mod 'puppetlabs-haproxy', '6.2.1'
```

2B: Defining a letsencrypt.pp manifest

We need a reusable way to create TLS certificates. I suggest putting a manifest file called letsencrypt.pp in your DMZ environment, like so:

```puppet
# Creates Let's Encrypt certificates and stores them ready for HAProxy
define letsencrypt::multi_cert (
  Array[String] $sans = [],
) {
  # The primary name comes first, followed by any SANs
  $certificates = [$name] + $sans

  letsencrypt::certonly { $name:
    domains              => $certificates,
    manage_cron          => true,
    cron_hour            => [0, 12],
    cron_minute          => '30',
    plugin               => 'webroot',
    webroot_paths        => ['/var/www/acme/'],
    # Concatenate chain + key into one PEM for HAProxy, then reload HAProxy
    cron_success_command => "cat /etc/letsencrypt/live/${name}/fullchain.pem /etc/letsencrypt/live/${name}/privkey.pem > /etc/ssl/private/${name}-fullchain-with-privkey.pem && /bin/systemctl reload haproxy.service",
  }
}
```

Let’s see what this does:

- By default there are no SANs, but you can pass an array of them (Let’s Encrypt allows up to 100 names per certificate, including the primary name)
- A cron job runs certbot twice a day, at 00:30 and 12:30
- After a successful certbot run, we concatenate the full chain and private key into a single file in `/etc/ssl/private` for HAProxy to read, then reload HAProxy
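As far as I can tell, `cron_success_command` is only run by the cron job, not by the certbot run Puppet performs when it first requests a certificate. That means the combined PEM HAProxy reads may not exist until the first scheduled run (up to roughly 12 hours later), and HAProxy appears to be reloaded twice a day even when nothing was renewed. Certbot’s deploy hook runs once for each certificate it successfully issues or renews, so it fits this job better. Below is a sketch of the same defined type using the module’s `deploy_hook_commands` parameter; it assumes your puppet-letsencrypt version supports that parameter and applies the hook to the initial request, so check the module’s reference before relying on it. It replaces the definition above rather than sitting alongside it.

```puppet
# Sketch: letsencrypt::multi_cert using a certbot deploy hook instead of
# cron_success_command. Replaces the earlier definition; don't declare both.
define letsencrypt::multi_cert (
  Array[String] $sans = [],
) {
  $combined_pem = "/etc/ssl/private/${name}-fullchain-with-privkey.pem"

  letsencrypt::certonly { $name:
    domains              => [$name] + $sans,
    manage_cron          => true,
    cron_hour            => [0, 12],
    cron_minute          => '30',
    plugin               => 'webroot',
    webroot_paths        => ['/var/www/acme/'],
    # Assumption: deploy_hook_commands exists in your module version.
    # Certbot runs the hook once per successfully issued or renewed cert;
    # the && keeps HAProxy from reloading if the concatenation fails.
    deploy_hook_commands => [
      "cat /etc/letsencrypt/live/${name}/fullchain.pem /etc/letsencrypt/live/${name}/privkey.pem > ${combined_pem} && /bin/systemctl reload haproxy.service",
    ],
  }
}
```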

2C: site.pp config

We need to get a lot of things perfect here:

- intercept `/.well-known/acme-challenge` paths and pass them to a special NGINX server running in the DMZ that Certbot uses for webroot verification
- allow HTTP and HTTPS traffic through firewalld/ufw etc. (I use the former)
- define the certs we want from Let’s Encrypt
- configure `haproxy.cfg` defaults to a modern standard
- configure `haproxy.cfg` backends and frontends
- set up HAProxy maps for our domains

The code blocks below can be pasted together into a single node definition; they’re only separated for clarity.

You probably only want this code to apply to your proxy servers, so wrap it in a node definition whose regex matches them, such as `/^proxy\d+\.dmz\.foo\.com$/`:

```puppet
node /^proxy\d+\.dmz\.foo\.com$/ {
  # generic config I want all my servers to have, e.g. SSH config
  include dmz_class
```

Now get some firewall rules in place:

```puppet
  # firewalls
  firewalld_rich_rule { 'Accept HTTP':
    ensure  => present,
    zone    => 'public',
    source  => '0.0.0.0/0', # allow from Internet as this is a DMZ load balancer
    service => 'http',
    action  => 'accept',
  }

  firewalld_rich_rule { 'Accept HTTPS':
    ensure  => present,
    zone    => 'public',
    source  => '0.0.0.0/0', # allow from Internet as this is a DMZ load balancer
    service => 'https',
    action  => 'accept',
  }

  # Defines a firewalld service for port 81. Let's Encrypt only talks to the
  # load balancer on port 80, but HAProxy forwards ACME challenges to NGINX on 81.
  # Note: this only defines the service; a firewalld_service resource is what
  # would actually open it in a zone.
  firewalld_custom_service { 'certbot-proxy':
    ensure => present,
    ports  => [{ 'port' => '81', 'protocol' => 'tcp' }],
  }
```

Next, we’ll start using the letsencrypt.pp manifest we made earlier. Consider pointing Certbot at the Let’s Encrypt staging server while testing.
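For testing, here’s a sketch of the same class pointed at Let’s Encrypt’s staging directory. Staging has far more generous rate limits but issues certificates browsers don’t trust, so it’s only for checking that the plumbing works. Declare it instead of the production `letsencrypt` class below, not alongside it.

```puppet
  # Testing only: Let's Encrypt staging endpoint (untrusted certificates,
  # higher rate limits). Declare this instead of the production class.
  class { 'letsencrypt':
    email  => 'certificate-alerts@foo.com',
    config => {
      'server' => 'https://acme-staging-v02.api.letsencrypt.org/directory', # staging
    },
  }
```

When you switch `server` back to production, certbot may keep the existing staging certificates until they’re due for renewal, so you may need to remove them (for example with `certbot delete --cert-name abctaylor.com`) or force a renewal before production certificates are issued.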

```puppet
  # certbot
  class { 'letsencrypt':
    email  => 'certificate-alerts@foo.com',
    config => {
      'server' => 'https://acme-v02.api.letsencrypt.org/directory', # production
    },
  }

  letsencrypt::multi_cert { 'abctaylor.com':
    sans => [
      'www.abctaylor.com',
      'www-qa.abctaylor.com',
      'owa.abctaylor.com',
      'mail.abctaylor.com',
      'cv.abctaylor.com',
      'blog.abctaylor.com',
    ],
  }
```

Now we need to get NGINX ready to serve webroot verification requests.

- Later you’ll see a test index.html page at `http://proxy1/.well-known/acme-challenge/`. Certbot doesn’t need it, but it tells us the HAProxy routing works.
- We run NGINX on port 81 because HAProxy already owns port 80. HAProxy passes ACME challenges to NGINX on port 81, which serves the challenge files Certbot writes under `/var/www/acme`. Make sure Certbot can write to this directory.

```puppet
  # nginx - so Let's Encrypt can fetch a working page at abctaylor.com/.well-known/acme-challenge/
  include nginx

  # NGINX server that Certbot's webroot challenges are served from
  nginx::resource::server { 'certbot':
    server_name => ['_'],
    listen_port => 81, # haproxy forwards to nginx, which is listening on port 81. haproxy owns the bind on 80 and does url routing.
    www_root    => '/var/www/acme/',
  }

  # Create the webroot including the challenge directories: Puppet won't
  # create missing parent directories for the index.html file below.
  file { [
    '/var/www',
    '/var/www/acme',
    '/var/www/acme/.well-known',
    '/var/www/acme/.well-known/acme-challenge',
  ]:
    ensure => directory,
  }

  # create nginx static file so the page returns status code 200
  $str = "
<html>
<head><title>ACME Response</title></head>
<body><p>Hello, ACME. Have a nice day. If you are not ACME and are a human, I also hope you have a nice day.</p></body>
</html>
"

  file { '/var/www/acme/.well-known/acme-challenge/index.html':
    ensure  => file,
    content => $str,
  }
```
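Since only HAProxy on this same box ever needs to reach NGINX, you could also bind NGINX to the loopback address so port 81 isn’t reachable from the network at all. Here’s a sketch using puppet-nginx’s `listen_ip` parameter, declared in place of the `certbot` server above. If you do this, the Certbot balancermember later on must use `127.0.0.1` for `ipaddresses` instead of the node’s FQDN, because NGINX would no longer listen on the external address.

```puppet
  # Sketch: same NGINX server, listening on loopback only, so port 81 is
  # never reachable from the network. Replaces the 'certbot' server above.
  nginx::resource::server { 'certbot':
    server_name => ['_'],
    listen_ip   => '127.0.0.1',
    listen_port => 81,
    www_root    => '/var/www/acme/',
  }
```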

Next, get HAProxy ready. Let’s start with defaults and global options. I imagine most of the below will work for most readers.

```puppet
  # haproxy
  class { 'haproxy':
    global_options   => {
      'log'                      => "${::ipaddress} local0",
      'chroot'                   => '/var/lib/haproxy',
      'pidfile'                  => '/var/run/haproxy.pid',
      'maxconn'                  => '4000',
      'user'                     => 'haproxy',
      'group'                    => 'haproxy',
      'daemon'                   => '',
      'stats'                    => 'socket /var/lib/haproxy/stats',
      'ca-base'                  => '/etc/ssl/certs',
      'crt-base'                 => '/etc/ssl/private',
      'ssl-default-bind-options' => ['no-sslv3', 'no-tlsv10', 'no-tlsv11'],
    },
    defaults_options => {
      'log'     => 'global',
      'stats'   => 'enable',
      'option'  => 'redispatch',
      'retries' => '3',
      'timeout' => [
        'http-request 10s',
        'queue 1m',
        'connect 10s',
        'client 1m',
        'server 1m',
        'check 10s',
      ],
      'maxconn' => '8000',
    },
  }
```

Frontends next. We need two: one for HTTP and another for HTTPS.

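- The former intercepts ACME challenges and sends them to a backend we’ll make later called `backend_certbot`.
- The latter handles all HTTPS traffic and chooses a backend based on a map file for our domains.
- The `reqadd` lines add an `X-Forwarded-Proto: https` header, which tells the web server behind HAProxy that the client connected over HTTPS. Without it, an app behind the proxy may generate `http://` links, which browsers then block as mixed content.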
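A caveat on `reqadd`: HAProxy removed it, along with the other old `req*`/`rsp*` rewrite directives, in version 2.1, so the frontends below won’t load on HAProxy 2.1 or later. If that’s what your distribution ships, here’s a sketch of the HTTPS frontend using `http-request set-header` instead, with the bind and map lookup unchanged; declare it in place of the `reqadd` version. Using `set-header` rather than `add-header` also overwrites any `X-Forwarded-Proto` a client sent itself. The HTTP frontend needs the same swap, and there `http` is the accurate value, since those requests arrive over plain HTTP.

```puppet
  # Sketch for HAProxy 2.1+: http-request set-header replaces the removed
  # reqadd directive. Declare this instead of the reqadd version.
  haproxy::frontend { 'frontend_https':
    bind    => { '*:443' => ['ssl', 'crt', '/etc/ssl/private/'] },
    mode    => 'http',
    options => {
      'http-request' => 'set-header X-Forwarded-Proto https',
      'use_backend'  => '%[base,map_beg(/etc/haproxy/domains-to-backends.map,backend_default)]',
    },
  }
```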

```puppet
  include ::haproxy

  haproxy::frontend { 'frontend_http':
    ipaddress => '0.0.0.0',
    ports     => '80',
    mode      => 'http',
    options   => {
      'reqadd'                      => 'X-Forwarded-Proto:\ https',
      'acl is_certbot'              => 'url_beg /.well-known/acme-challenge',
      'use_backend backend_certbot' => 'if is_certbot',
    },
  }

  haproxy::frontend { 'frontend_https':
    bind    => { '*:443' => ['ssl', 'crt', '/etc/ssl/private/'] },
    mode    => 'http',
    options => {
      'use_backend' => '%[base,map_beg(/etc/haproxy/domains-to-backends.map,backend_default)]',
      'reqadd'      => 'X-Forwarded-Proto:\ https',
    },
  }
```

Now for the promised map file. For simplicity’s sake, we’ll use one backend called `backend_default`:

```puppet
  haproxy::mapfile { 'domains-to-backends':
    ensure   => 'present',
    mappings => [
      { 'abctaylor.com' => 'backend_default' },
      { 'www.abctaylor.com' => 'backend_default' },
      { 'blog.abctaylor.com' => 'backend_default' },
    ],
  }
```

Next, define the two backends: one for Certbot and one for everything else.

```puppet
  haproxy::backend { 'backend_default':
    mode    => 'http',
    options => {
      'option'  => [],
      'balance' => 'roundrobin',
    },
  }

  haproxy::backend { 'backend_certbot':
    mode    => 'http',
    options => {
      'option'  => [
        'httpchk HEAD / HTTP/1.1\r\nHost:\ localhost',
      ],
      'balance' => 'roundrobin',
    },
  }
```
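On health checks: because `backend_default` has an empty `option` list, the `check` option on its balancermember only makes HAProxy confirm that a TCP connection to port 80 succeeds. If you’d rather it confirm that NGINX actually answers HTTP, here’s a sketch of the same backend with an HTTP check, to declare in place of the one above. The `Host` value is an assumption; use a site your internal NGINX server serves. HAProxy treats 2xx and 3xx responses as healthy, so a redirect from `/` still passes.

```puppet
  # Sketch: backend_default with an HTTP health check instead of a bare TCP check.
  # The Host header value is an assumption; use a vhost your NGINX server answers.
  haproxy::backend { 'backend_default':
    mode    => 'http',
    options => {
      'option'  => [
        'httpchk HEAD / HTTP/1.1\r\nHost:\ blog.abctaylor.com',
      ],
      'balance' => 'roundrobin',
    },
  }
```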

Finally, define the balancermembers and close the node block with the last }.

- `balancermember_web2_lon_core_foo_com` is just the name of the internal NGINX server I’m protecting.
- `listening_service` must match the name of the backend the member belongs to.
- We don’t want a `check` option for the Certbot backend.
- We run the Certbot backend (NGINX) on port 81 because HAProxy already owns port 80. Let’s Encrypt still talks to us on port 80 for Certbot verification: the HTTP frontend intercepts those requests and passes them to port 81 behind the scenes.

```puppet
  haproxy::balancermember { 'balancermember_web2_lon_core_foo_com':
    listening_service => 'backend_default',
    ports             => '80',
    server_names      => ['web2-lon.core.arcza.net'],
    ipaddresses       => ['web2-lon.core.arcza.net'],
    options           => 'check',
  }

  haproxy::balancermember { "balancermember_${facts['fqdn']}":
    listening_service => 'backend_certbot',
    ports             => '81',
    server_names      => [$facts['fqdn']],
    ipaddresses       => [$facts['fqdn']],
  }
}
```

Part 3: networking

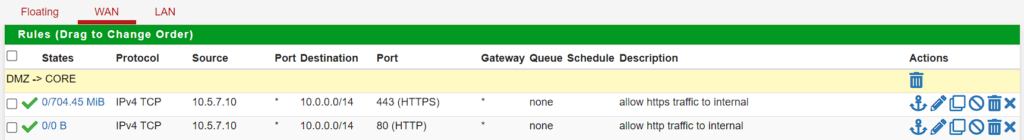

This part is too site-specific for me to tell you exactly what to do, but here’s my setup. I have two firewalls:

- fwred: it faces the Internet on 82.71.78.0/29

- fwgreen: it faces the LAN of fwred

The red firewall port-forwards (NATs) any TCP connection on ports 80 and 443 to my DMZ proxy.

The green firewall then allows traffic from 10.5.7.10 (the load balancer) to anywhere in the core network on ports 80 and 443. You could lock this down further, for example to only the web servers behind HAProxy.

Testing

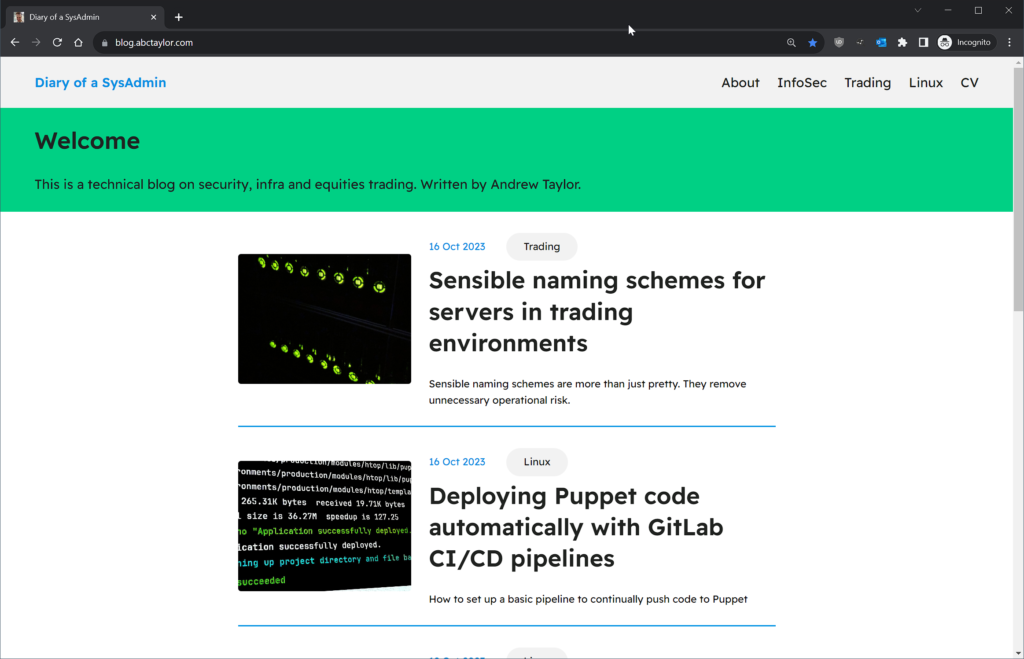

My blog loads:

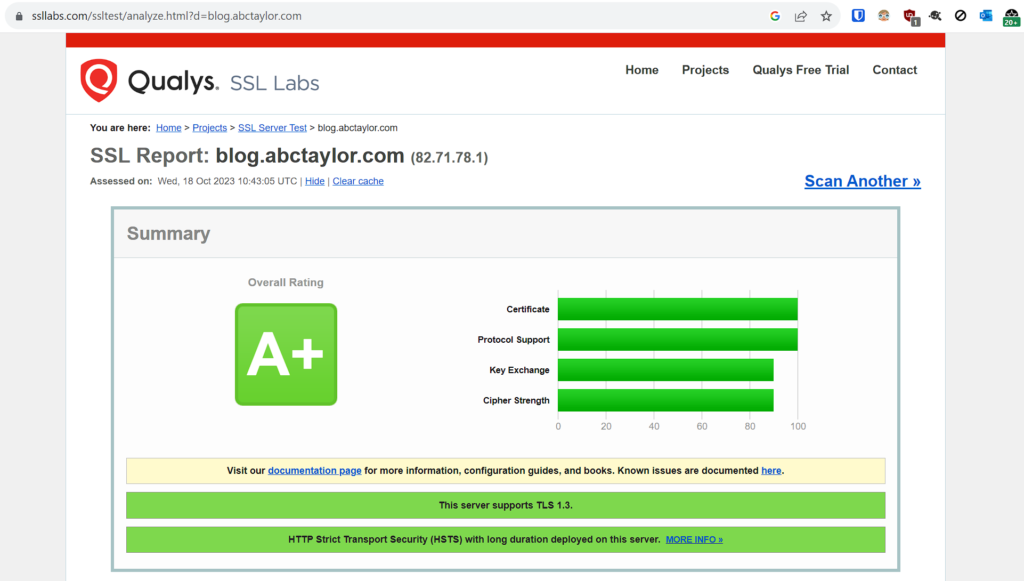

I also get an A+ rating on Qualys SSL Labs:

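This is due to HSTS: SSL Labs requires a long-lived Strict-Transport-Security header for an A+. If you want this, replace your existing `backend_default` definition with the one below (Puppet won’t let you declare the same backend twice), and submit your domain at https://hstspreload.org if you also want it on browsers’ preload lists.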

```puppet
  haproxy::backend { 'backend_default':
    mode    => 'http',
    options => {
      'http-response' => 'set-header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"',
      'balance'       => 'roundrobin',
    },
  }
```
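hstspreload.org also expects plain HTTP to redirect to HTTPS on the same host. As written, `frontend_http` only routes ACME challenges, so any other plain-HTTP request matches no backend and HAProxy answers with a 503. Here’s a sketch of a `frontend_http` that keeps the ACME routing and sends everything else to HTTPS with a 301. It uses anonymous ACLs, so the result doesn’t depend on the order in which the haproxy module writes out options. Declare it in place of the existing `frontend_http`.

```puppet
  # Sketch: ACME challenges still go to NGINX on port 81 via backend_certbot;
  # every other plain-HTTP request gets a permanent redirect to HTTPS.
  # The reqadd line is dropped: it only ever reached the Certbot backend.
  haproxy::frontend { 'frontend_http':
    ipaddress => '0.0.0.0',
    ports     => '80',
    mode      => 'http',
    options   => {
      'use_backend backend_certbot' => 'if { url_beg /.well-known/acme-challenge }',
      'redirect'                    => 'scheme https code 301 unless { url_beg /.well-known/acme-challenge }',
    },
  }
```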