Goal

We’ll build a pipeline that deploys Puppet code to a production Puppet server as soon as anything is pushed to master. We’ll need a dedicated local user on the Puppet server, cut it a keypair, and set up GitLab to do the rsync from a fresh container each time.

The environment

- a Puppet server

- a GitLab CE server

- a bunch of clients that pull code at 3am

Puppet server config

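- Add a user with `useradd -m` (so it gets a home directory for its `~/.ssh`). I’ll use `l-gitlabci` for this.

- `chown -R` all of `/etc/puppetlabs/code` to `l-gitlabci:puppet`. This allows our user to write here, and the `puppet` software account to continue to run code.

- `su` into your new user’s account.

- Cut a key with `ssh-keygen -t ecdsa` (elliptic curves are faster than RSA). Leave the passphrase empty, since the pipeline has no one to type it.

- Append the public key to `~/.ssh/authorized_keys` (`cat ~/.ssh/id_ecdsa.pub >> ~/.ssh/authorized_keys`) so the server accepts the matching private key.

- Get the base64 “version” of the private key with `base64 -w0 ~/.ssh/id_ecdsa` and copy it to your clipboard.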
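Why `-w0`? The private key is several lines long, and without `-w0` the base64 output wraps too. Here is a minimal sketch that shows the encoding step is lossless. It assumes OpenSSH’s `ssh-keygen` and GNU coreutils `base64` (for `-w0`), and it uses a throwaway key in a temporary directory standing in for `~/.ssh/id_ecdsa`. Those paths are illustrative only.

```shell
#!/bin/sh
# Sketch: turn a multi-line SSH private key into one base64 line for GitLab,
# then prove that line decodes back to the identical key.
# Assumes OpenSSH's ssh-keygen and GNU coreutils base64 (for -w0).
set -eu

workdir=$(mktemp -d)            # stands in for ~/.ssh in this demo
trap 'rm -rf "$workdir"' EXIT

# Throwaway ECDSA key with an empty passphrase (-N ""), as CI cannot type one.
ssh-keygen -q -t ecdsa -N "" -f "$workdir/id_ecdsa"

# -w0 disables line wrapping, so the whole key becomes a single line.
encoded=$(base64 -w0 "$workdir/id_ecdsa")
echo "encoded length: ${#encoded}"

# Round trip: what the pipeline decodes must match the original byte for byte.
printf '%s' "$encoded" | base64 -d > "$workdir/decoded"
cmp -s "$workdir/id_ecdsa" "$workdir/decoded" && echo "round trip OK"
```

On the real server, the value you copy is just the output of `base64 -w0 ~/.ssh/id_ecdsa`.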

GitLab server config

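- Add a CI/CD variable in Settings > CI/CD > Variables called `SSH_PRIVATE_KEY` and paste in the base64 version from above. Protect and mask the variable. Masked variables must be a single line, which is why we encoded the key with `base64 -w0`. Protected variables are only passed to pipelines on protected branches, so make sure `master` is protected (the default branch usually is).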

- Set up a runner if you don’t have one. Runners are the agents that execute pipeline jobs, for example in a shell session on the GitLab server itself (not recommended) or in a container that’s spun up and destroyed for each job (recommended). The `image:` line in the pipeline only takes effect with a container-based executor such as Docker. You want to see a green dot next to the runner before you proceed.

- Add a `.gitlab-ci.yml` to the root of the repository with just one stage (`deploy`). Breaking down the key steps:
  - we get a simple container going (e.g. `alpine`)
  - we add the `openssh-client` and `rsync` packages to the container
  - we start an `ssh-agent` to hold the key
  - we make a `.ssh` directory in the container’s root home directory
  - we decode our base64 `SSH_PRIVATE_KEY` variable back to normal and add it to the agent
  - we turn off strict host key checking (discussion on this below)
  - finally we rsync our code


stages: # List of stages for jobs, and their order of execution

- deploy

deploy-job: # This job runs in the deploy stage.

stage: deploy

environment: production

image: alpine:latest

before_script:

- apk update && apk add openssh-client rsync

- eval $(ssh-agent -s)

- mkdir /root/.ssh

- ssh-add <(echo "$SSH_PRIVATE_KEY" | base64 -d)

- echo "StrictHostKeyChecking no" >> ~/.ssh/config

script:

- echo "Deploying application..."

- rsync -athv --delete ./ l-gitlabci@puppet2-lon.ad.example.com:/etc/puppetlabs/code

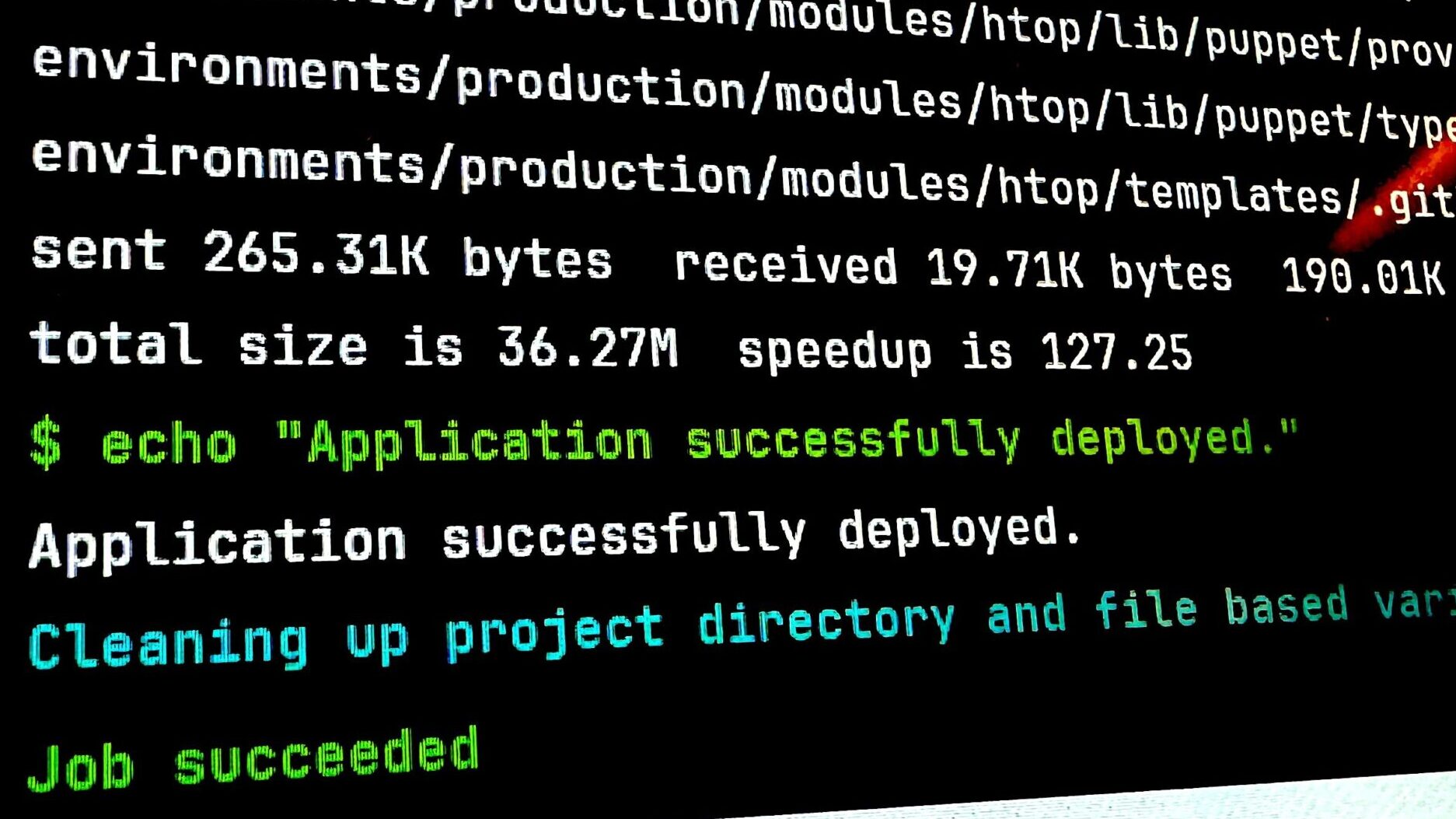

- echo "Application successfully deployed."Testing

Well… just push to master:

Not working for you?

- Does `/etc/ssh/sshd_config` on the Puppet server allow `PubkeyAuthentication`?

- Is the public key in `~l-gitlabci/.ssh/authorized_keys`, with `~/.ssh` at mode `700` and the file at `600`?

- Can you `ssh -vvv l-gitlabci@puppetserver` from a test server with the `id_ecdsa` key in `~/.ssh/`?

- Is your rsync command correct? The `-athv` flags are more than enough (`a` already implies `r`, and `t` too).

- Did you copy the private key (correct) or the public key (incorrect) to GitLab?

- Did you pipe the private key into the base64 encoder? (`cat id_ecdsa | base64 -w0`)

- Firewalls? DNS? Routing? SELinux?
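The private-vs-public and encoding questions can be checked in one go before you blame the network. Below is a minimal sketch, assuming OpenSSH’s `ssh-keygen`; `check_ci_key` is a hypothetical helper name, not part of any tool. It decodes the value you intend to store in `SSH_PRIVATE_KEY`, derives a public key from it with `ssh-keygen -y`, and compares that with `id_ecdsa.pub`.

```shell
#!/bin/sh
# Sketch: check that a base64 value meant for the SSH_PRIVATE_KEY variable
# decodes to the private half of a given public key.
# Assumes OpenSSH's ssh-keygen and a base64 that supports -d.
set -eu

# Hypothetical helper. Usage: check_ci_key <base64-value> <public-key-file>
check_ci_key() {
    ck_dir=$(mktemp -d)
    # Decode under umask 077: ssh-keygen rejects private key files others can read.
    (umask 077 && printf '%s' "$1" | base64 -d > "$ck_dir/key")
    # -y derives the public key; it fails if the input is not a usable private key.
    if ! ck_derived=$(ssh-keygen -y -f "$ck_dir/key" 2>/dev/null); then
        rm -rf "$ck_dir"
        echo "FAIL: not a usable private key (public key pasted? bad encoding?)"
        return 1
    fi
    rm -rf "$ck_dir"
    # Compare key type and key material only; the trailing comment may differ.
    ck_want=$(awk '{print $1, $2}' "$2")
    ck_got=$(printf '%s\n' "$ck_derived" | awk '{print $1, $2}')
    if [ "$ck_want" = "$ck_got" ]; then
        echo "OK: private key matches $2"
    else
        echo "FAIL: private key does not belong to $2"
        return 1
    fi
}
```

For example, on the Puppet server as `l-gitlabci`: `check_ci_key "$(base64 -w0 ~/.ssh/id_ecdsa)" ~/.ssh/id_ecdsa.pub`. If you copy the value back out of GitLab instead, pass that string as the first argument.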

Notes

When do the clients get the code?

Whenever they next run `puppet agent`.

Don’t try to engineer a pipeline that runs the agent across the entire environment.

I’d suggest either:

- all servers pull code at 3am local time

- servers pull code based on a stability day (e.g. some servers are “Tuesday” servers, which pull on Tuesdays)

That way, if a change is catastrophic, you haven’t killed all your infra and still have some servers you can connect to for recovery.
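If the agents run from cron rather than as the long-running `puppet` service (which checks in every 30 minutes by default), the two schedules above differ only in cron’s day-of-week field. This is a minimal sketch under that assumption: `agent_cron_line` is a hypothetical helper, and `/opt/puppetlabs/bin/puppet` assumes the standard Puppet agent packages. It prints an `/etc/cron.d`-style line (which includes a user field) that runs `puppet agent --onetime --no-daemonize` at 03:00 server local time.

```shell
#!/bin/sh
# Sketch: print an /etc/cron.d entry that runs the Puppet agent once at 03:00,
# either every day or only on a server's "stability day".
# The agent path assumes a standard Puppet agent package install.
set -eu

PUPPET_BIN=/opt/puppetlabs/bin/puppet

# Hypothetical helper. Usage: agent_cron_line [Mon|Tue|Wed|Thu|Fri|Sat|Sun|daily]
agent_cron_line() {
    case "${1:-daily}" in
        daily) dow='*' ;;
        Sun) dow=0 ;; Mon) dow=1 ;; Tue) dow=2 ;; Wed) dow=3 ;;
        Thu) dow=4 ;; Fri) dow=5 ;; Sat) dow=6 ;;
        *) echo "unknown day: $1" >&2; return 1 ;;
    esac
    # Fields: minute hour day-of-month month day-of-week user command
    printf '0 3 * * %s root %s agent --onetime --no-daemonize\n' "$dow" "$PUPPET_BIN"
}
```

On a “Tuesday” server you might run `agent_cron_line Tue > /etc/cron.d/puppet-agent` as root, and plain `agent_cron_line` elsewhere. Stop the `puppet` service too, so the agent doesn’t also run on its own interval.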

Why no host key checking?

Yes, you could:

- add an `SSH_FINGERPRINT` variable, or

- curl a list of known host fingerprints from an internal web server

This marginally improves security, with a big downside risk: this is Puppet, which is possibly managing all your infra.

In an emergency, when I’m trying to deploy to a new Puppet server with a different host key, faffing around with a host key setting I forgot to change is the last thing I want. I actively discourage host key checking here.

GitLab environments?

A great idea would be to match Puppet environments with GitLab environments. I’d suggest having a branch for each environment. I’m not doing this yet.